Solving SICP

This report is written as a post-mortem of a project that has, perhaps, been the author’s most extensive personal project: creating a complete and comprehensive solution to one of the most famous programming problem sets in the modern computer science curriculum, the exercises of “Structure and Interpretation of Computer Programs” (SICP) by Abelson, Sussman, and Sussman ([2]).

It measures exactly:

- How much effort SICP requires (729 hours 19 minutes over eight months, in 292 sessions).

- How many computer languages it involves (6).

- How many pieces of software are required (9).

- How much communication with peers is needed.

It suggests:

- A practical software-supported task management procedure for solving coursework.

- Several improvements, on the technical side, to any process of teaching hard skills.

- Several improvements, on the social side, to any kind of teaching process.

The solution is published online (the source code and pdf file):

This report (and the data in the appendix) can be applied immediately as:

- A single-point estimate of the SICP problem set difficulty.

- A class handout aimed at increasing students’ motivation to study.

- A data source for a study of learning patterns among adult professionals aiming for continuing education.

- An “almost ready” protocol for a convenient problem-set solution procedure, which produces artefacts that can be later used as a student portfolio.

- An “almost ready” and “almost convenient” protocol for measuring the time consumption of almost any problem set expressible in digital form.

Additionally, a time-tracking data analysis can be reproduced interactively in the org-mode version of this report. (See: Appendix: Emacs Lisp code for data analysis)

1. Introduction

Programming language textbooks are not a frequent object of study, as they are expected to convey existing knowledge. However, teaching practitioners, when they face the task of designing a computer science curriculum for their teaching institution, have to base their decisions on something. An “ad-hoc” teaching method, primarily based on studying some particular programming language fashionable at the time of selection, is still a popular choice.

There have been attempts to approach course design with more rigour. “Structure and Interpretation of Computer Programs” was created as a result of such an attempt. SICP was revolutionary for its time, and perhaps can still be considered revolutionary nowadays. Twenty years later, this endeavour was analysed by Felleisen in a paper “Structure and Interpretation of Computer Science Curriculum” ([14]). He reflected upon the benefits and drawbacks of the deliberately designed syllabus from a pedagogical standpoint and proposed what he believes to be a pedagogically superior successor to this first generation of deliberately designed curricula. (See: “How to Design Programs” (HTDP) [15])

Leaving aside the pedagogical quality of the textbook (as the author is not a practising teacher), this report touches on a different (and seldom considered!) aspect of a computer science (and, in general, any other subject’s) curriculum: precisely how much work is required to pass a particular course.

This endeavour was spurred by the author’s previous experience of learning about partial differential equations through a traditional pen-and-paper approach, only mildly augmented with time-tracking software. But even such a tiny augmentation exposed an astonishing disparity between the declared laboriousness of a task and the empirically measured time required to complete it.

The author, therefore, decided to build upon that experience and to design as smooth, manageable, and measurable an approach to performing university coursework as possible. A computer science subject was an obvious choice.

The solution was planned, broken down into parts, harnessed with a software support system, and executed in a timely and measured manner by the author, thus proving that the chosen goal is doable. The complete measured data are provided. Teaching professionals may benefit from it when planning coursework specialised to their requirements.

More generally, the author wants to propose a comprehensive reassessment of university teaching based on empirical approaches (understanding precisely how, when, and what each party involved in the teaching process does), in order to choose the most efficient learning approach (potentially even using an optimisation algorithm) for every particular student.

2. Solution approach

The author wanted to provide a solution that would satisfy the following principles:

- Be complete.

- Be a reasonably realistic model of a solution process as if executed by the intended audience of the course – that is, freshman university students with little programming experience.

- Be done in a “fully digital”, “digitally native” form.

- Be measurable.

These principles need an explanation.

2.1. Completeness

2.1.1. Just solve all of the exercises

The author considers completeness to be an essential property of every execution of a teaching syllabus.

In simple words, what does it mean “to pass a course” or “to learn a subject” at all? How exactly can one formalise the statement “I know calculus”? Even simpler, what allows a student to say “I have learnt everything that was expected in a university course on calculus”?

It would be a good idea to survey teachers, students, employers, politicians and random members of the community to establish what it means for them that a person “knows a subject”.

Following are some potential answers to these questions:

- Passing an oral examination.

- Passing a written examination.

- Passing a project defence committee questioning.

- Completing a required number of continuous assessment (time-limited) tasks.

- Completing coursework.

- Attending a prescribed number of teaching sessions (lectures or tutorials).

- Reading a prescribed amount of prescribed reading material.

Any combination of these can also be chosen to signify the “mastering” of a subject, but the course designer is then met with a typical goal-attainment, multi-objective optimisation problem ([18]); such problems are still usually solved by reducing the multiple goals to a single, engineered goal.

Looking at the list above from a “Martian point of view” ([5]), we will see that all the goals listed above are reducible to a single “completing coursework” goal. “Completing coursework” is not reducible to any of those specific sub-goals in general, so the “engineered goal” may take the shape of a tree-structured problem set (task/subtask). “Engineered” tasks may include attending tutorials, watching videos and writing feedback.

Moreover, realistically speaking, doing coursework is often the only way a working professional can study without abandoning her job altogether.

Therefore, choosing a computer science textbook that is known primarily for the problem set that comes with it, even more than for the actual text of the material, was a natural choice.

However, that is not enough, because even though “just solving all of the exercises” may be the most measurable and the most necessary learning outcome, is it sufficient?

As the author intended to “grasp the skill” rather than just “pass the exercises”, he initially considered inventing additional exercises to cover parts of the course material not covered by the original problem set.

For practical reasons (in order for the measured data to reflect the original book’s exercises), in the “reference solution” referred to in this report’s bibliography, the reader will not find exercises that are not a part of the original problem set.

The author, however, re-drew several figures from the book, representing those types of figures that are not required to be drawn by any of the exercises.

This was done in order to “be able to reproduce the material contained in the book from scratch at a later date”. This was done only for the cases for which the author considered the already available exercises insufficient. The additional figures did not demand a large enough amount of working time to change the total difficulty estimate noticeably.

2.1.2. A faithful imitation of the university experience

One common objection to the undertaken endeavour may be the following. In most universities (if not all), it is not necessary to solve all exercises in order to complete a course. This is often true, and especially true for mathematics-related courses (whose problem books usually contain several times more exercises than are needed to cover the course content). The author, however, does not consider the SICP exercises to be such a problem set. The exercises cover the course material with minimal overlap, and the author even considered adding several more for the material that the exercises did not fully cover.

Another objection would be that a self-study experience cannot faithfully imitate a university experience at all because a university course contains tutorials and demonstrations as crucial elements. Problem-solving methods are “cooked” by teaching assistants and delivered to the students in a personalised manner in those tutorials.

This is indeed a valid argument. However, teaching assistants may not necessarily come from a relevant background; they are often recruited from an available pool and not explicitly trained. For such cases, the present report may serve as a crude estimate of the time needed for the teaching assistants to prepare for the tutorials.

Furthermore, many students choose not to attend classes at all, either because they are over-confident or because of a high workload. For these groups, this report may similarly serve as a crude estimate.

Moreover, prior research suggests that class attendance has little effect on the learning outcomes of the top quartile (by grade) of students ([9] and [21]).

For the student groups that benefit most from tutorials, this report (if given as a recommended reading for the first lesson) may serve as additional evidence in favour of attendance.

Additionally, nothing seems to preclude recording videos of tutorials and providing them as supplementary material in subsequent deliveries of the course. The lack of interactivity may be compensated for by a large body of material (such as video recordings of questions and answers) accumulated over many years, together with a well-functioning query system.

2.1.3. Meta-cognitive exercises

It is often underestimated how much imbalance there is between a teacher and a pupil. The teacher not only knows the subject of study better (which is expected) but also decides how and when a student is going to study. This is often overlooked by practitioners, who consider themselves simply as sources of knowledge or, even worse, only as examiners. However, it is worth considering the whole effect that a teacher has on a student’s life. In particular, a student has no choice but to trust the teacher on the choice of exercises, and is likely to mimic the teacher’s choice of tools for executing a solution.

The main point of the previous paragraph is that teaching is not only the process of data transmission. It is also the process of metadata transmission, the development of meta-cognitive skills. (See [22]) Therefore, meta-cognitive challenges, although they may very well be valuable contributions to the student’s “thinking abilities”, deserve their own share of consideration when preparing a course.

Examples of meta-cognitive challenges include:

- Non-sequentiality of material and exercises, so that earlier exercises are impossible to solve without first solving later ones.

- The incompleteness of the treatise.

- The terseness of the narrative.

- Lack of modern software support.

- Missing difficulty/hardness estimation for tasks.

- The vastly non-uniform difficulty of the problems.

An additional challenge to the learning process is the lack of peer support. Learning institutions have attempted to encourage peer support among students, but the success of those attempts is unclear. Do students really help each other in those artificially created support groups? Inevitably, communication in these groups will not be limited to the subject of study. To what extent does this side communication affect the learners?

A support medium is even more critical for adult self-learners, who do not get even those artificial support groups created by school functionaries and have no access to teaching assistants.

It should be noted that the choice of support medium (a group chat platform or a mailing list), however irrelevant to the subject itself it may seem, is a significant social factor.

This is not to say that a teacher should create a support group in whatever particular social medium that happens to be fashionable at the start of the course.

This is only to say that deliberate effort should be spent on finding the best support configuration.

In the author’s personal experience:

- The #scheme Freenode channel was used as a place to ask questions in real-time. #emacs was also useful.

- http://stackoverflow.com was used to ask asynchronous questions.

- The Scheme Community Wiki http://community.schemewiki.org was used as reference material.

- The author emailed some prominent members of the Scheme community with unsolicited questions.

- The author reported errors in the documents produced by the Scheme community process.

- The author asked for help on the Chibi-Scheme mailing list.

- There was also some help from the Open Data Science Slack chat.

- There was also some help from the Closed-Circles data science community.

- There was also some help from the rulinux@conference.jabber.ru community.

- There was also some help from the Shanghai Linux User Group.

- There was also some help from the http://www.dxdy.ru scientific forum.

- There was also some help from the Haskell self-study group in Telegram.

It should be noted that, of those communities, only the Open Data Science community and a small Haskell community reside in “fashionable” communication systems.

The summary of community interaction is placed under the “meta-cognitive exercises” section because the skill of finding people who can help you with your problems is one of the most useful soft skills and one of the hardest to teach. Moreover, the very people who can and might answer questions are, in most situations, not at all obliged to do so, so soliciting an answer from people who are not deliberately cooperating is another cognitive exercise worth covering explicitly in a lecture.

Repeating the main point of the previous paragraph in other words: human communities consist of rude people. Naturally, no-one can force anyone to bear rudeness, but no-one can force anyone to be polite, either. The meta-cognitive skill of extracting valuable knowledge from willing but rude people is critical but seldom taught.

The author considers it vital to convey to students, as well as to teachers, the following idea: it is not the fashion, popularity, easy availability, promotion, and social acceptability of the support media that matters. Unfortunately, it is not even the technological sophistication, technological modernity or convenience; it is the availability of information and the availability of people who can help.

Support communication was measured by the following:

- Scheme-system related email threads in the official mailing list: 28.

- Editor/IDE related email threads + bug reports: 16.

- Presentation/formatting related email threads: 20.

- Syllabus related email threads: 3.

- Documentation related email threads (mostly obsolete link reports): 16.

- IRC chat messages: 2394 #scheme messages initiated by the author (the number obtained by simple filtering by the author’s nickname).

- Software packages re-uploaded to Software Forges: 2 (recovered from original authors’ personal archives).

The author did not collect measures of other communication means.

2.1.4. Figures to re-typeset

Several figures from SICP were re-drawn using a textual representation. The choice of figures was driven by the idea that someone who successfully completed the book should also be able to re-create the book material and therefore should know how to draw similar diagrams. Therefore, those were chosen to be representative of the kinds of figures not required to be drawn by any exercise.

The list of re-drawn figures:

- 1.1 Tree representation, showing the value of each sub-combination.

- 1.2 Procedural decomposition of the sqrt program.

- 1.3 A linear recursive process.

- 2.2 Box-and-pointer representation of (cons 1 2).

- 2.8 A solution to the eight-queens puzzle.

- 3.32 The integral procedure viewed as a signal-processing system.

- 3.36 An RLC circuit.

- 5.1 Data paths for a Register Machine.

- 5.2 Controller for a GCD Machine.

2.2. Behaviour modelling, reenactment and the choice of tools

2.2.2. The tools

The final choice of tools turned out to be the following:

- Chibi-Scheme, as the Scheme implementation.

- srfi-159, as a pretty-printing tool.

- srfi-27, as a random bits library.

- srfi-18, as a threading library.

- (chibi time), as a timing library.

- (chibi ast), as a macro expansion tool (not strictly necessary).

- (chibi process), for calling ImageMagick.

- GNU Emacs, as the only IDE.

- org-mode, as the main editing mode and the main planning tool.

- f90-mode, as a low-level coding adaptor.

- geiser, which turned out not to be ready for production use, but was still useful for evaluating simple expressions.

- magit, as the most fashionable GUI for git.

- gfortran, as the low-level language.

- PlantUML, as the principal diagramming language.

- TikZ + luaLaTeX, as the secondary diagramming language.

- Graphviz, as a tertiary diagramming language.

- ImageMagick, as the engine behind the “picture language” section.

- git, as the main version control tool.

- GNU diff, bash, grep, as the tools for simple text manipulation.

Chibi-Scheme was virtually the only Scheme system claiming to fully support the latest Scheme standard, R7RS-large (Red Edition), so there was no other choice.

This is especially true when imagining a student unwilling to delve into the peculiarities of the various schools of thought responsible for the various partly compliant Scheme systems.

Several libraries (three of which were standardised, and three of which were not) were used to ensure the completeness of the solution.

Effectively, it is not possible to solve all the exercises using only the standardised part of the Scheme language.

Even Scheme combined with standardised extensions is not enough.

However, only one non-standard library was strictly required: (chibi process), which served as a bridge between Scheme and the graphics toolkit.

git is not often taught in schools. The reasons may include teachers’ unwillingness to busy themselves with something they deem either trivial or impossible to get by without, or simply their being overloaded with work. However, practice often demonstrates that students still too often graduate without any concept of file version control, which significantly hinders their work efficiency. Git was chosen because it is, arguably, the most widely used version-control system.

ImageMagick turned out to be the easiest way to draw images consisting of simple straight lines.

There is still no standard way to connect Scheme applications to applications written in other languages.

Therefore, by the principle of minimal extension, ImageMagick was chosen, as it required just a single non-standard Scheme procedure.

Moreover, this procedure (a simple synchronous application call) is probably the most universal interoperability primitive ever invented.

Almost all operating systems support applications executing other applications.

PlantUML is a code-driven implementation of the international standard for software visualisation diagrams. The syntax is straightforward and well documented. The PlantUML-Emacs interface exists and is relatively reliable. The textual representation conveys the hacker spirit and supports easy version control. UML almost totally dominates the software visualisation market, and almost every university programming degree includes it to some extent. It therefore seemed very natural (where the problem permitted) to solve the “diagramming” problems of SICP with diagrams compliant with the industry standard.

Graphviz was used in an attempt to use another industry standard for solving diagramming problems not supported by the UML.

The dot language benefits from being even more fully machine-parsable and context-independent than UML. However, it turned out not to be as convenient as expected.

TikZ is practically the only general-purpose, code-driven drawing package. So, when neither UML nor Graphviz could properly capture the complexity of the diagrammed models, TikZ ended up being the only choice. An equally natural approach could be to draw everything using a graphical tool, such as Inkscape or Adobe Illustrator. The first problem with the images generated by such tools, though, is that they are hard to manage under version control. The second problem is that it was desirable to keep the entire output of the course in one digital artefact (i.e., one file). Single-file packaging reduces confusion caused by different versions of the same code, makes searching more straightforward, and simplifies presentation to a potential examiner.

gfortran, or GNU Fortran, was the low-level language of choice for the last two problems in the problem set. The reasons for choosing this not very popular language were:

- The author already knew the C language, so using C would have given him an undue advantage over an imaginary first-year student.

- Fortran is low-level enough for the purposes of the book.

- There is a free/GPL implementation of Fortran.

- Fortran 90 already existed by the time SICP 2nd ed. was published.

GNU Unix utilities: the author did not originally intend to use these, but diff turned out to be extremely effective for illustrating the differences between generated pieces of code in Chapter 5. Additionally, in some cases, they were used as a universal glue between different programs.

GNU Emacs is, de facto, the most popular IDE among Scheme users, the IDE used by the Free Software Foundation founders, likely the editor used when writing SICP, and also likely to be chosen by an aspiring freshman as the most “hacker-like” editor. It is, perhaps, the most controversial choice, as the IDE most likely to be used by freshman university students in general would be Microsoft Visual Studio. Another popular option would be DrRacket, which packages a component dedicated to supporting the solving of SICP problems. However, Emacs turned out to have the best support for “generic Lisp” development, even though its support for Scheme is not as good as may be desired. The decisive point in its favour ended up being org-mode (discussed later). Informally speaking, entirely buying into the Emacs platform ended up being a substantial mind-expanding experience. The learning curve is steep, however.

As mentioned above, the main point of this report is to supply the problem execution measures for public use. Later sections will elaborate on how data about exercise completion were collected using org-mode’s time-tracking facility. The time-tracking data in Section 8 do not include learning Emacs or org-mode. However, some data about these activities were collected nevertheless:

Reading the Emacs Lisp manual required 10 study sessions of total length 32 hours 40 minutes. Additional learning of Emacs without reading the manual required 59 hours 14 minutes.
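Org-mode's time tracking records every work session as a CLOCK line ending in a duration such as "=>  1:13", and its clock tables add those durations up. The arithmetic behind totals like the ones above can be sketched in a few lines of R7RS Scheme. The procedure names below are hypothetical and serve only as an illustration; they are not part of org-mode or of the reference solution.

```scheme
(import (scheme base) (scheme write))

;; Parse an org-style "H:MM" duration (hours may have several digits)
;; into a number of minutes.
(define (duration->minutes str)
  (let loop ((i 0))
    (cond ((= i (string-length str))
           (error "not an H:MM duration" str))
          ((char=? (string-ref str i) #\:)
           (+ (* 60 (string->number (substring str 0 i)))
              (string->number (substring str (+ i 1) (string-length str)))))
          (else (loop (+ i 1))))))

;; Format a number of minutes back into "H:MM".
(define (minutes->duration m)
  (let ((h (quotient m 60))
        (mm (remainder m 60)))
    (string-append (number->string h) ":"
                   (if (< mm 10) "0" "")
                   (number->string mm))))

;; Sum a list of "H:MM" session durations and return the total as "H:MM".
(define (sum-durations durations)
  (minutes->duration (apply + (map duration->minutes durations))))

;; The two Emacs-learning figures quoted above: prints 91:54
(display (sum-durations '("32:40" "59:14")))
(newline)
```

Working in whole minutes keeps the arithmetic exact. Converting back to "H:MM" only at the end avoids rounding drift across hundreds of sessions.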

2.3. Org-mode as a universal medium for reproducible research

Org-mode helps to resolve dependencies between exercises. SICP provides an additional challenge (a meta-cognitive exercise) in that its problems are highly dependent on one another. For example, problems from Chapter 5 require working solutions to problems of Chapter 1. A standard practice of modern schools is to copy the code (or other forms of solution) and paste it into the solution of a dependent exercise. However, in the later parts of SICP, the solutions end up requiring tens of pieces of code written in earlier chapters. Sheer copying would not only blow up the solution files immensely and make searching painful; it would also make it extremely hard to propagate fixes for bugs discovered in later uses back into the earlier solutions.
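One org-babel mechanism that addresses this problem is the noweb reference. A block named with #+name: can be spliced into any other block that sets the :noweb yes header argument, by writing <<name>> on a line of its own. A bug fixed in the named block then propagates to every later use. The text above does not say which mechanism the reference solution used. The fragment below is only an illustration based on SICP's square-root procedure from Section 1.1.7, and the block names average and newton-sqrt are hypothetical.

```org
#+name: average
#+begin_src scheme
(define (average x y) (/ (+ x y) 2))
#+end_src

#+name: newton-sqrt
#+begin_src scheme :noweb yes
<<average>>
(define (improve guess x) (average guess (/ x guess)))
(define (good-enough? guess x) (< (abs (- (* guess guess) x)) 0.001))
(define (sqrt-iter guess x)
  (if (good-enough? guess x) guess (sqrt-iter (improve guess x) x)))
(define (sqrt-newton x) (sqrt-iter 1.0 x))
#+end_src

#+begin_src scheme :noweb yes :results value
<<newton-sqrt>>
(sqrt-newton 9)
#+end_src
```

Because references nest, a Chapter 5 block can pull in a whole chain of earlier definitions by naming only the last link.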

People familiar with the work of Donald Knuth will recognise the similarity of org-mode to his WEB system and its web2c implementation. Another commonly used WEB-like system is Jupyter Notebook (See [29]).

Org-mode helps package a student’s complete work into a single file. Imagine a case in which a student needs to send his work to the teacher for examination. Every additional file that a student sends along with the code is a source of potential confusion. Even proper file naming, though it increases readability, requires significant concentration to enforce and forces the teacher to dig into peculiarities that become irrelevant the moment he signs the work off. Things get worse when the teacher has not just to examine the student’s work but also to test it (which is typical of computer science exercises).

Org-mode can be exported into a format convenient for later revisits. Another reason to carefully consider the solution format is the students’ future employability. This problem is not unfamiliar to Arts majors, who have long been collecting and arranging “portfolios” of their work. However, STEM students generally do not understand the importance of a portfolio. A prominent discussion topic in job interviews is “What have you already done?”. Having a portfolio, in a form easily presentable during an interview, may be immensely helpful to the interviewee.

A potential employer is almost guaranteed not to have any software or equipment to run the former student’s code. Even the student himself would probably lack a carefully prepared working setup at the interview. Therefore, the graduation work should be “stored”, or “canned” in a format as portable and time-resistant as possible.

Unsurprisingly, the most portable and time-resistant format for practical use is plain white paper. Ideally, the solution (after being examined by a teacher) should be printable as a report. Additionally, the amount of work required to turn a solution that is “just enough to pass” into a readable report is small compared with the full size of SICP, and this would be an emotional incentive for students to post-process their work carefully. Naturally, “plain paper” is not a very manageable medium nowadays. The closest manageable approximation is PDF. So, the actual “source code” of a solution should be logically and consistently exportable into a PDF file. Org-mode can serve this purpose through its PDF export backend.

Org-mode has an almost unimaginable number of use cases. (For example, this report has been written in org-mode.) While the main benefits of using org-mode for formatting the coursework were the interactivity of code execution and the possibility of export, another benefit that came almost for free was minimal-overhead time-tracking (human performance profiling). Although this initially appeared as a by-product of choosing a specific tool, the measures collected with the aid of org-mode are the main contribution of this report.

How the particular features of org-mode were used is described in the next section, along with the statistical summary.

2.4. Different problem types

SICP’s problems can be roughly classified into the following classes:

- Programming problems in Scheme without input.

- Programming problems in Scheme with input (possibly running other programs).

- Programming problems in Scheme with graphical output.

- Programming problems in a “low-level language of your choice”.

- Mathematical problems.

- Standard-fitting drawing exercises.

- Non-standard drawing exercises.

- Essays.

Wonderfully absent are the problems of the data analysis kind.

This section will explain how these classes of problem can be solved in a “single document mode”.

Essays are the most straightforward case. The student can simply write the answer below the heading corresponding to the problem. Org-mode provides a few minimal formatting capabilities that are enough to cover all the required use cases.

Mathematical problems require a TeX system to be present on the student’s machine, and employ org-mode’s ability to embed TeX mathematics, along with previews, directly into the text. The author ended up doing almost no pen-and-paper calculations while solving SICP’s mathematical exercises.

Programming exercises in Scheme are, for the most part, easily formatted as org-mode “babel” blocks, with the output pasted directly into the document body and updated as needed.

Programming exercises in Scheme with input require a little effort to make them work correctly. It is sometimes not entirely obvious whether the input should be interpreted as verbatim text or as executable code. Ultimately, it turned out to be possible to format all the input data as either “example” or “code” blocks, feed them into the recipient blocks via the “:stdin” header argument, and present all the test cases (different inputs) and test results (corresponding outputs) in the same document.

Programming exercises in a low-level language required wrapping the low-level code into “babel” blocks and combining them in a “shell” block. This introduces an operating system dependency. However, GNU Unix utilities are widespread enough for this not to be a real limitation.

Programming exercises with graphical output turned out to be the trickiest part from the software suite perspective. Eventually, a Scheme-system (chibi) dependent wrapper around the ImageMagick graphics manipulation tool was written. Org-mode has a special syntax for the inclusion of graphic files, so the exercise solutions were generating the image files and pasting the image inclusion code into the org buffer.
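The wrapper itself is not reproduced here. The sketch below is a minimal illustration under stated assumptions. It relies on the system procedure exported by (chibi process), which runs a program with the given arguments and waits for it to finish. It also assumes that ImageMagick's convert command is on the PATH; ImageMagick 7 also accepts magick in its place. The procedure names and the (x1 y1 x2 y2) segment representation are hypothetical. If, as assumed here, system hands each argument to the program directly rather than through a shell, the draw primitives need no quoting.

```scheme
(import (scheme base) (scheme write) (chibi process))

;; A segment is a list of four integers: (x1 y1 x2 y2).
;; Build one ImageMagick "-draw" primitive for a straight line.
(define (segment->draw-primitive seg)
  (let ((n (lambda (k) (number->string (list-ref seg k)))))
    (string-append "line " (n 0) "," (n 1) " " (n 2) "," (n 3))))

;; Build the argument list for convert: a white canvas, a black
;; stroke, one "-draw" option per segment, then the output file.
(define (segments->convert-args width height segments file)
  (append (list "-size"
                (string-append (number->string width) "x"
                               (number->string height))
                "xc:white" "-stroke" "black")
          (apply append
                 (map (lambda (seg) (list "-draw" (segment->draw-primitive seg)))
                      segments))
          (list file)))

;; Render the segments into FILE, then print an org-mode image link so
;; that a block evaluated with ":results output raw" shows the picture.
(define (draw-segments! width height segments file)
  (apply system "convert" (segments->convert-args width height segments file))
  (display (string-append "[[file:" file "]]"))
  (newline))
```

A call such as (draw-segments! 200 200 '((10 10 190 190)) "diagonal.png") would write the image and emit its link. Keeping the argument builder separate from the side-effecting call makes it possible to check the generated command without running ImageMagick.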

Standard drawing exercises illustrate a problem that is extremely widespread but seldom well understood, perhaps because people aiming to solve it usually do not come from the programming community. Indeed, there are several standard visual conventions for industrial illustrations and diagramming, including UML, ArchiMate, SDL, and various others. Wherever a SICP figure admitted a standard-based representation, the author tried to use that standard to express the answer to the problem. The PlantUML code-driven diagramming tool was used most often, as its support for UML proved to be superior to the alternatives. The org-plantuml bridge made it possible to solve these problems in a manner similar to the coding problems: as “org-babel” blocks.

Non-standard drawing exercises, most prominently those requiring the drawing of environment diagrams (debugging interfaces), were significantly more challenging. When a prepared mental model (i.e. an established diagramming standard) was absent, the diagram had to be implemented from scratch in an improvised way. The TikZ language proved to have enough features to cover the requirements of the book where PlantUML was not enough. It required much reading of the manual and a fair level of familiarity with TeX.
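Such a block might look like the fragment below. It assumes that plantuml is enabled in org-babel-load-languages and that org-plantuml-jar-path points to a PlantUML jar. The :file header argument names the image to generate. The diagram is an illustrative activity-diagram rendering of the GCD controller (SICP Figure 5.2) in PlantUML's activity syntax, not the author's actual solution, and the file name is hypothetical.

```org
#+begin_src plantuml :file gcd-controller.png
start
while (b = 0?) is (no)
  :t = (rem a b);
  :a = b;
  :b = t;
endwhile (yes)
stop
#+end_src
```

Because the diagram is plain text, it diffs and versions like code, which is the property that motivated choosing PlantUML over graphical editors.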

3. Time analysis, performance profiling and graphs

This section explains exactly how the work process was organised and then presents some aggregated work measures that were collected.

3.1. Workflow details and profiling

The work was organised as follows.

At the start of the work, an outline tree mirroring the book's tree of subsections was created. Most leaves are two-state TODO headings. (Some leaves correspond to sections without problems and are therefore not TODO-styled.)

A TODO heading is a special type of org-mode heading that exports its state (TODO/DONE) to a simple database, which makes it possible to monitor the overall TODO/DONE ratio of the document.

Intermediate levels are not TODO headings, but they contain a field showing the ratio of DONE problems to all problems in their subtree.

The top-level ratio is the total number of finished problems divided by the total number of problems.

An excerpt of the outline looks as follows:

```org
* SICP [385/404]
** Chapter 1: Building abstractions ... [57/61]
*** DONE Exercise 1.1 Interpreter result
CLOSED: [2019-08-20 Tue 14:23]...
*** DONE Exercise 1.2 Prefix form
CLOSED: [2019-08-20 Tue 14:25]
#+begin_src scheme :exports both :results value
(/ (+ 5 4 (- 2 (- 3 (+ 6 (/ 4 5)))))
   (* 3 (- 6 2) (- 2 7)))
#+end_src

#+RESULTS:
: -37/150
...
```
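The `[done/total]` counters in such an outline can be mimicked with a few lines of R7RS Scheme. This is a hypothetical model, not org-mode's implementation: headings are nested lists, and every TODO-style leaf in a subtree is counted, as the report describes. (Org-mode itself counts only direct children unless `org-hierarchical-todo-statistics` is set to `nil`.)

```scheme
(import (scheme base) (scheme write))

;; Hypothetical outline representation:
;;   (done "title") / (todo "title")  two-state TODO leaf
;;   (plain "title")                  leaf without a problem
;;   (section "title" child ...)      intermediate heading
(define (node-kind n) (car n))
(define (node-children n) (cddr n))

;; Return (done . total) for a subtree, counting only TODO-style leaves.
(define (progress n)
  (case (node-kind n)
    ((done) (cons 1 1))
    ((todo) (cons 0 1))
    ((plain) (cons 0 0))
    ((section)
     (let loop ((cs (node-children n)) (d 0) (t 0))
       (if (null? cs)
           (cons d t)
           (let ((p (progress (car cs))))
             (loop (cdr cs) (+ d (car p)) (+ t (cdr p)))))))
    (else (error "unknown node kind" n))))

;; Render the counter the way org-mode shows it in a heading.
(define (cookie n)
  (let ((p (progress n)))
    (string-append "[" (number->string (car p))
                   "/" (number->string (cdr p)) "]")))

(define chapter
  '(section "Chapter 1"
     (done "Exercise 1.1")
     (done "Exercise 1.2")
     (plain "Section prose")
     (todo "Exercise 1.3")))

(display (cookie chapter)) ; prints [2/3]
(newline)
```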

When work is clearly divided into parts and, for each unit, its completion status is self-evident, the visibility of completeness creates a sense of control in the student. The “degree of completeness of the whole project”, available at any moment, provides an important emotional experience of “getting close to the result with each completed exercise”.

Additional research is needed on how persistent this emotion is in students and how much it depends on the uneven distribution of difficulty or the total time consumption. There is, however, empirical evidence that even very imprecise, self-measured KPIs do positively affect the chance of reaching the goal. (See: [42])

From the author’s personal experience, an uneven distribution of difficulty among the leaf-level tasks is a major demotivating factor. However, the real problems we face in daily life are not of consistent difficulty either, so managing an uneven distribution of difficulty is a critical meta-cognitive skill. Partitioning a large task into smaller ones (_not necessarily_ in the way suggested by the book) may be a way to tackle this problem. Traces of this approach are visible in the “reference” solution PDF.

The problems were solved almost sequentially: work on the next problem started immediately after the previous one had been finished.

Out of more than 350 exercises, only 13 were solved out of order (see section 3.2). Sequentiality is essential for proper time accounting, because the total time attributed to a problem is the sum of the durations of all study sessions between the completion of the previous problem and the completion of the problem considered. The problem sequence does not have to be identical to the order proposed by the book, but if a problem is postponed, the study sessions corresponding to the initial attempt to solve it must somehow be removed from the session log.
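The accounting rule can be sketched as follows. Times are assumed to be integer minutes since an arbitrary epoch, a session is a `(start . end)` pair, and the procedure names are hypothetical. A session that straddles a completion is split between the two problems, which is the usual case when a problem is finished in the middle of a session. Sessions belonging to postponed first attempts would simply be removed from the list before the call.

```scheme
(import (scheme base) (scheme write))

;; Length of the overlap between [a1, a2] and [b1, b2], or 0 if disjoint.
(define (overlap a1 a2 b1 b2)
  (max 0 (- (min a2 b2) (max a1 b1))))

;; Minutes of study falling inside the interval [from, to].
(define (minutes-between sessions from to)
  (let loop ((ss sessions) (sum 0))
    (if (null? ss)
        sum
        (loop (cdr ss)
              (+ sum (overlap (caar ss) (cdar ss) from to))))))

;; Given the project start and the completion times of consecutive
;; problems, return the minutes attributed to each problem.
(define (attribute-minutes sessions start completions)
  (let loop ((prev start) (cs completions) (acc '()))
    (if (null? cs)
        (reverse acc)
        (loop (car cs) (cdr cs)
              (cons (minutes-between sessions prev (car cs)) acc)))))

;; Two sessions; problem A is closed in the middle of the first one,
;; problem B near the end of the second one.
(define sessions '((0 . 60) (1000 . 1090)))
(display (attribute-minutes sessions 0 '(40 1080))) ; prints (40 100)
(newline)
```

Problem B receives the last 20 minutes of the first session plus 80 minutes of the second one; the gap between the sessions contributes nothing.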

In this report, the study sessions corresponding to initial attempts at solving out-of-order problems were simply ignored. This did not affect the overall duration measures much, because those sessions were usually short.

Sequentiality is one of the weakest points of this report. It is generally hard to find motivation to work through a problem set sequentially. SICP does enforce sequentiality for a large share of problems by making the later problems depend on solutions of the previous ones, but this “dependence coverage” is not complete.

As the most straightforward workaround, the author again suggests dropping the initial attempts at solving out-of-order problems from the dataset entirely. This should be relatively easy to do, because the student is (arguably) likely to decide within a single study session whether to continue solving the problem or to postpone it. That study session can then be trimmed accordingly.

The author read the whole book before starting the project. The time spent reading the prose could also be included in the project’s total time consumption, but the author decided against it. In fact, when approached from the viewpoint of completing the exercises, the material in the book appeared to have nothing in common with the impression created by only reading the text.

A deliberate effort was made to avoid finishing a problem at the same moment as ending a study session.

The reason was to exploit two well-known effects (see [3]):

- “When you have something left undone, it is easier to make yourself start the next session.”

- Even just reading the description of a problem makes the reader start thinking about how to solve it.

The data come in two closely related datasets.

Dataset 1: Exercise completion times were recorded using the standard org-mode mechanism that adds a CLOSED time-stamp when a task is marked DONE. (See Appendix: Full data on the exercise completion times.) For every exercise, the completion time was recorded as an org-mode time-stamp with minute precision.

Dataset 2: Study sessions were recorded in a separate org-mode file in the standard org-mode time-interval format (two time-stamps):

"BEGIN_TIME -- END_TIME".

(See Appendix: Full data on the study sessions.)
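Turning Dataset 2 into durations only needs a date-to-day-number conversion. The sketch below assumes that each interval line starts with a 22-character time-stamp of the form `[2019-08-20 Tue 14:23]` (three-letter weekday, no `CLOCK:` prefix) and ends with another one. It uses Howard Hinnant's well-known `days_from_civil` formula, so sessions crossing midnight or a month boundary are handled correctly. The procedure names are illustrative.

```scheme
(import (scheme base) (scheme write))

;; Days since 1970-01-01 for a proleptic Gregorian date
;; (H. Hinnant's days_from_civil algorithm).
(define (days-from-civil y m d)
  (let* ((y (if (<= m 2) (- y 1) y))
         (era (floor-quotient y 400))
         (yoe (- y (* era 400)))
         (doy (+ (quotient (+ (* 153 (if (> m 2) (- m 3) (+ m 9))) 2) 5)
                 (- d 1)))
         (doe (+ (* yoe 365) (quotient yoe 4) (- (quotient yoe 100)) doy)))
    (+ (* era 146097) doe -719468)))

;; Parse "[YYYY-MM-DD Www HH:MM]" into minutes since the epoch,
;; reading each field from its fixed position in the stamp.
(define (stamp->minutes s)
  (define (field from to) (string->number (substring s from to)))
  (+ (* 1440 (days-from-civil (field 1 5) (field 6 8) (field 9 11)))
     (* 60 (field 16 18))
     (field 19 21)))

;; Parse "BEGIN--END" (spaces around "--" are tolerated) into (start . end).
(define (interval->session line)
  (let ((n (string-length line)))
    (cons (stamp->minutes (substring line 0 22))
          (stamp->minutes (substring line (- n 22) n)))))

(define (session-minutes line)
  (let ((s (interval->session line)))
    (- (cdr s) (car s))))

(display (session-minutes "[2019-08-20 Tue 23:50]--[2019-08-21 Wed 00:20]"))
(newline) ; prints 30
```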

During each study session, the author tried to concentrate as much as possible and to do only activities related to the problem set. These include not only writing the code and tuning the software setup, but the whole “package” of activities leading to the declaration of a problem as solved: reading or watching additional material, asking questions, fixing bugs in related software, and similar activities.

Several software problems were discovered while working on this solution and were reported to the software authors. Several of them were fixed quickly, which allowed the author to continue with the solution; for a few others, workarounds were found. None of the problems prevented full completion of the problem set.

The author found it very helpful to have a simple dependency resolution tool at his disposal. As mentioned above, SICP’s problems make heavy use of one another, so it was critical to find a way to re-use code within a single org-mode document. Org’s WEB-like capabilities (“noweb” links) proved sufficient. A noweb link is a method for verbatim inclusion of one code block into another. In particular, Exercise 5.48 required including 58 other code blocks into the final solution block. Plain copying would not suffice, because SICP exercises often involve evaluating code written earlier (in previous exercises) with code written for the current exercise. Later exercises are therefore likely to expose errors in the solutions of earlier ones, and each fix has to propagate to every block that depends on the corrected code.
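Noweb expansion is essentially recursive text substitution. The following sketch is a simplification of what org-babel does, not its implementation: a block is a list of lines, a line consisting only of `<<name>>` (possibly indented) is replaced by the expanded contents of the named block, and a `seen` list rejects cyclic references. Org-babel also supports references in the middle of a line, which this sketch ignores; the block names are made up for the example.

```scheme
(import (scheme base) (scheme write))

;; If LINE, ignoring leading spaces, is exactly "<<name>>", return name; else #f.
(define (noweb-ref line)
  (let* ((n (string-length line))
         (start (let skip ((i 0))
                  (if (and (< i n) (char=? (string-ref line i) #\space))
                      (skip (+ i 1))
                      i))))
    (and (>= (- n start) 4)
         (string=? (substring line start (+ start 2)) "<<")
         (string=? (substring line (- n 2) n) ">>")
         (substring line (+ start 2) (- n 2)))))

;; Expand block NAME from LIBRARY (an alist name -> lines), recursively.
;; SEEN holds the names currently being expanded, to reject cycles.
(define (expand name library seen)
  (when (member name seen)
    (error "cyclic noweb reference" name))
  (let ((entry (assoc name library)))
    (unless entry (error "unknown block" name))
    (let loop ((lines (cdr entry)) (out '()))
      (if (null? lines)
          (reverse out)
          (let ((ref (noweb-ref (car lines))))
            (loop (cdr lines)
                  (if ref
                      (append (reverse (expand ref library (cons name seen))) out)
                      (cons (car lines) out))))))))

(define library
  '(("square" "(define (square x) (* x x))")
    ("sum-of-squares" "<<square>>"
     "(define (sum-of-squares a b) (+ (square a) (square b)))")
    ("exercise" "<<sum-of-squares>>" "(display (sum-of-squares 3 4))")))

;; Prints the three lines of the fully expanded "exercise" block.
(for-each (lambda (l) (display l) (newline))
          (expand "exercise" library '()))
```

Because the included text is re-expanded every time, correcting `square` once changes every block that includes it, directly or transitively, which is exactly what plain copying cannot provide.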

3.2. Out-of-order problems and other measures

The following list presents some of the aggregated measures of the work on the problem set.

- 729 hours total work duration.

- 2.184 hours mean time spent on solving one problem.

- 0.96 hours for the median problem.

- 94.73 hours for the hardest problem: writing a Scheme interpreter in a low-level language.

- 652 study sessions.

- 1.79 study sessions per problem on average.

- More than 78,000 lines in the .org file (more than 2.6 megabytes), 5,300 pages in the PDF.

- 1 study session required for the median problem. The mean (1.79) being almost twice the median hints that a few of the hardest problems required significantly more effort than typical ones.

- 13 problems were solved out of order:

  - “Figure 1.1 Tree representation…”
  - “Exercise 1.3 Sum of squares.”
  - “Exercise 1.9 Iterative or recursive?”
  - “Exercise 2.45 Split.”
  - “Exercise 3.69 Triples.”
  - “Exercise 2.61 Sets as ordered lists.”
  - “Exercise 4.49 Alyssa’s generator.”
  - “Exercise 4.69 Great-grandchildren.”
  - “Exercise 4.71 Louis’ simple queries.”
  - “Exercise 4.79 Prolog environments.”
  - “Figure 5.1 Data paths for a Register Machine.”
  - “Exercise 5.17 Printing labels.”
  - “Exercise 5.40 Maintaining a compile-time environment.”
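The gap between mean and median can be illustrated with a small computation. The session counts below are invented for illustration and are not the report's data: when most problems take a single session and a few take many, the mean is pulled well above the median, which is the pattern seen in the measures above.

```scheme
(import (scheme base) (scheme write))

;; Insertion sort, to keep the sketch free of non-standard libraries.
(define (insert x sorted)
  (cond ((null? sorted) (list x))
        ((<= x (car sorted)) (cons x sorted))
        (else (cons (car sorted) (insert x (cdr sorted))))))

(define (sort-numbers xs)
  (let loop ((xs xs) (acc '()))
    (if (null? xs) acc (loop (cdr xs) (insert (car xs) acc)))))

(define (mean xs) (/ (apply + xs) (length xs)))

;; Median of a non-empty list; the average of the two middle values
;; when the length is even.
(define (median xs)
  (let* ((s (list->vector (sort-numbers xs)))
         (n (vector-length s))
         (mid (quotient n 2)))
    (if (odd? n)
        (vector-ref s mid)
        (/ (+ (vector-ref s (- mid 1)) (vector-ref s mid)) 2))))

;; Illustrative, right-skewed session counts (NOT the report's data).
(define sessions-per-problem '(1 1 1 1 1 1 1 2 3 8))
(display (list (mean sessions-per-problem) (median sessions-per-problem)))
(newline) ; prints (2 1)
```

A single problem with eight sessions is enough to double the mean while leaving the median untouched, which is why the median is the better description of a “typical” problem here.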

Thirteen problems were solved out of order. This suggests that those problems may have been the trickiest (although not necessarily the hardest).

3.3. Ten hardest problems by raw time

| Exercise | Days Spent | Spans Sessions | Minutes Spent |
|---|---:|---:|---:|
| Exercise 2.46 make-vect. | 2.578 | 5 | 535 |
| Exercise 4.78 Non-deterministic queries. | 0.867 | 6 | 602 |
| Exercise 3.28 Primitive or-gate. | 1.316 | 2 | 783 |
| Exercise 4.79 Prolog environments. | 4.285 | 5 | 940 |
| Exercise 3.9 Environment structures. | 21.030 | 10 | 1100 |
| Exercise 4.77 Lazy queries. | 4.129 | 9 | 1214 |
| Exercise 4.5 cond with arrow. | 12.765 | 7 | 1252 |
| Exercise 5.52 Making a compiler for Scheme. | 22.975 | 13 | 2359 |
| Exercise 2.92 Add, mul for different variables. | 4.556 | 11 | 2404 |
| Exercise 5.51 EC-evaluator in low-level language. | 28.962 | 33 | 5684 |

It is hardly surprising that writing a Scheme interpreter in a low-level language (Exercise 5.51) turned out to be the most time-consuming problem of the whole problem set. After all, it required learning an entirely new language from scratch. In the author’s case, the low-level language happened to be Fortran 2018. Learning Fortran to the required level is relatively straightforward, albeit time-consuming.

Exercise 5.52, a compiler for Scheme, implicitly required the previous exercise to be solved already, as the runtime support code is shared between the two problems. The whole compiled EC-evaluator turned out to be a single (very long) Fortran function.

Exercise 2.92 shows that it is possible to create very difficult exercises even without introducing the concept of mutation into the curriculum. This problem bears the SICP authors' comment “This is not easy!”. Indeed, the final solution contained more than eight hundred lines of code, involved designing an expression normalisation algorithm from scratch, and required twenty-five unit tests to ensure consistency. It is simply a huge task.

Exercise 4.5 is probably one of the exercises that would benefit most from a teaching assistant’s help. The exercise itself is not that hard. The considerable workload comes from the fact that testing the solution requires a fully working interpreter. Therefore, this exercise in fact includes reading the whole of Chapter 4 and assembling the interpreter. Furthermore, the solution involves a lot of list manipulation, which is inherently error-prone when only the functions already provided by SICP are used.

Exercise 4.77 required heavy modification of the codebase that had already been accumulated. It is probably the most architecture-intensive exercise of the book, apart from the one requiring a full rewrite of the backtracking engine of the Prolog-like query language on top of the non-deterministic evaluator (Exercise 4.78). The code is very hard to implement incrementally, and the system is hardly testable until the last bit is finished. Furthermore, this exercise required modifying the lowest-level data structures of the problem domain and adapting all the higher-level functions accordingly.

Exercise 4.79 is, in fact, an open-ended problem. The author considers it done, but the task is formulated so vaguely that it admits an almost infinite range of possible solutions. This problem can hence consume any amount of time.

Exercise 3.9 required implementing a library for drawing environment diagrams. This may seem a trivial demand, as environment diagrams are an expected element of a decent debugger. However, the Scheme standard does not include many debugging capabilities. Debugging facilities differ among Scheme implementations, but even these are usually not visual enough to generate the images required by the book. An EnvDraw library (and its relatives) exists, but the author failed to embed any of them into easily publishable Scheme code. It turned out to be more straightforward to draw the diagrams as TikZ pictures in embedded LaTeX blocks.

The time spent on Exercise 3.28 includes assembling the whole circuit-simulation code into a working system. The time actually required to solve the problem itself was comparatively short.

The same can be said about Exercise 2.46, which required writing a bridge between a Scheme interpreter and a drawing system. The exercise itself is relatively easy.

To sum up this section, the most laborious exercises in the book are the ones that require a student to:

- implement language features that are “assumed to be given”;

- assemble scattered code fragments into a working program;

- solve problems that have little to no theoretical coverage in the book.

In total, the ten most challenging problems account for about 280 hours of work, which is more than a third of the workload of the full problem set.
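The 280-hour figure can be checked directly against the “Minutes Spent” column of the table in section 3.3, using exact arithmetic until the final display:

```scheme
(import (scheme base) (scheme write))

;; "Minutes Spent" of the ten hardest problems (table in section 3.3).
(define top-ten-minutes '(535 602 783 940 1100 1214 1252 2359 2404 5684))

(define total-minutes (apply + top-ten-minutes)) ; 16873
(define total-hours (/ total-minutes 60))        ; exact rational, about 281.2

(display (inexact total-hours))
(newline)
;; Share of the 729-hour total workload (a little under 0.39).
(display (inexact (/ total-hours 729)))
(newline)
```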

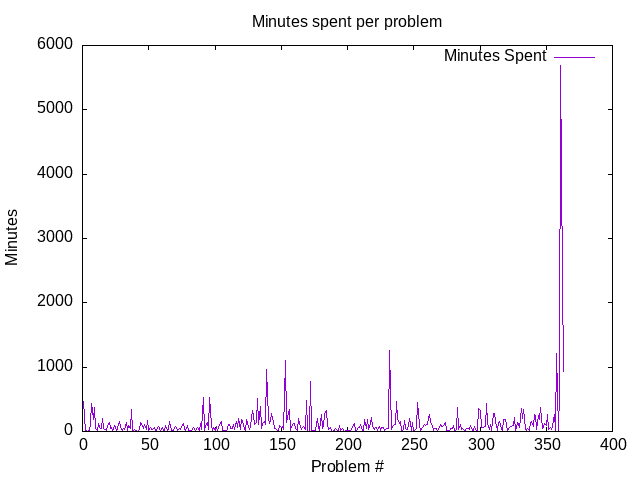

3.4. Minutes spent per problem

This graph is probably the most representative of the whole problem set. As expected, the last few problems turned out to be among the hardest. The second part of the course turned out to be more time-consuming than the first one.

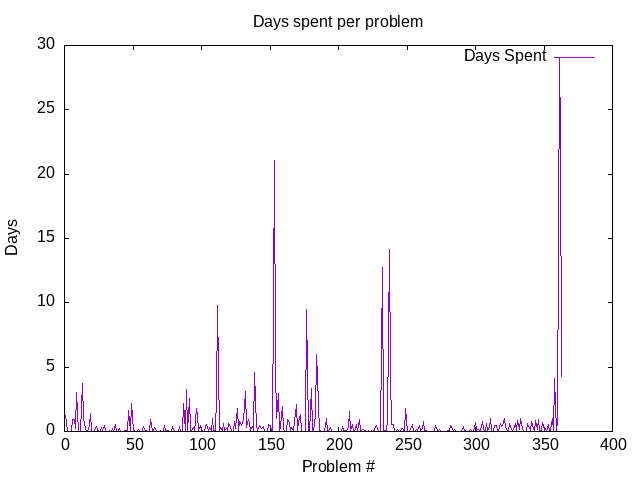

3.5. Days spent per problem

The figure depicts the number of days (Y-axis) a problem (enumerated by the X-axis coordinate) was loaded in the author’s brain. In simple words, it is the number of days that the state of “trying to solve a problem number X” spanned.

This measure is less justified than the “high concentration” time presented on the figure in the previous section. However, it may nevertheless be useful for encouraging students who get demotivated when spending a long “high concentration” session on a problem with no apparent success. Naturally, most (but not all) problems are solvable within one session (one day).

The second spike in the distribution can be attributed to general tiredness from solving such a huge problem set and the need for a break. The corresponding spike on the graph of study sessions is less prominent.

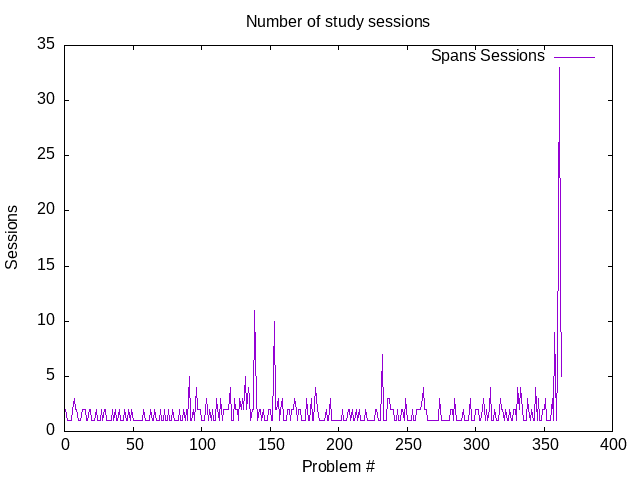

3.6. Study sessions per problem

A “session” may be defined as a period of high concentration when the student is actively trying to solve a problem and get the code (or essay) written. This graph presents the number of sessions (Y-axis) spent on each problem (enumerated by the X-axis), regardless of the session length.

When a student goes on a vacation, the problem, presumably, remains loaded in the student’s brain. However, periodic “assaults” in the form of study sessions may be necessary to feed the subconscious processing with the new data.

During vacation time, there should be a spike on the “days per problem” graph, but not on the “sessions per problem” graph. This can be seen in the second spike of the “days per problem” graph: its counterpart on the “sessions per problem” graph is much lower.
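The two measures of sections 3.5 and 3.6 can be computed from the same session log. In this hypothetical sketch (times again in integer minutes, sessions as `(start . end)` pairs), “days spent” is the wall-clock time between two consecutive completions, while “sessions spanned” counts the sessions overlapping that interval, so a long break increases the former but not the latter.

```scheme
(import (scheme base) (scheme write))

;; True when the session shares a non-empty stretch of time with [from, to].
(define (overlaps? session from to)
  (< (max (car session) from) (min (cdr session) to)))

;; "Sessions spanned": how many study sessions touch the interval.
(define (sessions-spanned sessions from to)
  (let loop ((ss sessions) (k 0))
    (cond ((null? ss) k)
          ((overlaps? (car ss) from to) (loop (cdr ss) (+ k 1)))
          (else (loop (cdr ss) k)))))

;; "Days spent": wall-clock days between two consecutive completions.
(define (days-spanned from to)
  (/ (- to from) 1440))

;; Two sessions separated by a week-long break; the previous problem was
;; closed at minute 40 and this one at minute 10080.
(define sessions '((0 . 60) (10000 . 10090)))
(display (sessions-spanned sessions 40 10080)) ; 2 sessions
(newline)
(display (inexact (days-spanned 40 10080)))    ; almost 7 days
(newline)
```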

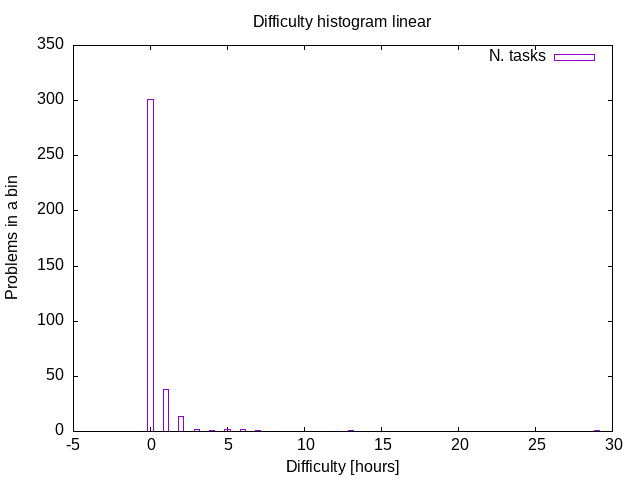

3.7. Difficulty histogram (linear)

The linearly-scaled difficulty histogram depicts how many problems (Y-axis) require up to “bin size” hours for solution. Naturally, most of the exercises are solvable within one to three hours.

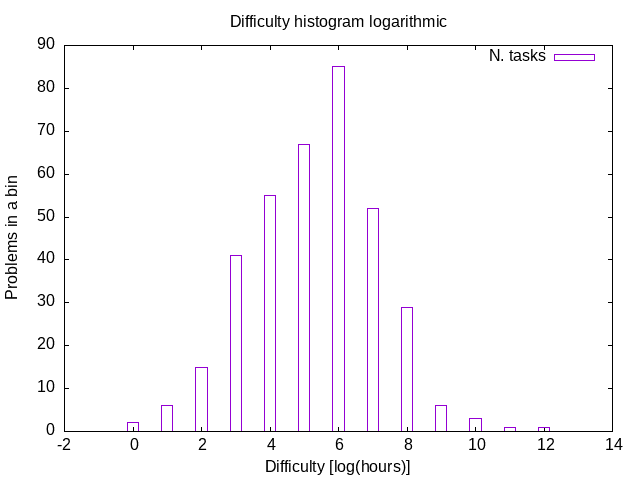

3.8. Difficulty histogram (logarithmic)

The logarithmically scaled difficulty histogram depicts how many problems (Y-axis) require up to \(2^X\) hours to solve. It is interesting to observe that the histogram shape resembles a unimodal distribution. It is hard to think of a theoretical foundation on which to base assumptions about the distribution law. Prior research, however, suggests that the distribution may be log-normal. (See [10])
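A logarithmic bin assignment of this kind can be sketched with exact integer arithmetic, so that a problem taking exactly one hour is not misplaced by floating-point rounding. The procedure names are illustrative, and the example uses the “Minutes Spent” values of the first eight rows of the appendix table.

```scheme
(import (scheme base) (scheme write))

;; True when MINUTES is at most 2^k hours (60 * 2^k minutes),
;; using integers only, also for negative k.
(define (fits? minutes k)
  (if (>= k 0)
      (<= minutes (* 60 (expt 2 k)))
      (<= (* minutes (expt 2 (- k))) 60)))

;; Smallest integer k such that the problem took at most 2^k hours.
(define (log2-bin minutes)
  (unless (> minutes 0) (error "log2-bin needs a positive duration" minutes))
  (let loop ((k 0))
    (cond ((not (fits? minutes k)) (loop (+ k 1)))  ; bin too small: move up
          ((fits? minutes (- k 1)) (loop (- k 1)))  ; smaller bin fits: move down
          (else k))))

;; Increment the count for bin K in an alist kept sorted by bin.
(define (bump k counts)
  (cond ((null? counts) (list (cons k 1)))
        ((= k (caar counts)) (cons (cons k (+ 1 (cdar counts))) (cdr counts)))
        ((< k (caar counts)) (cons (cons k 1) counts))
        (else (cons (car counts) (bump k (cdr counts))))))

(define (histogram minutes-list)
  (let loop ((ms minutes-list) (counts '()))
    (if (null? ms)
        counts
        (loop (cdr ms) (bump (log2-bin (car ms)) counts)))))

(display (histogram '(459 2 10 4 11 118 436 193)))
(newline)
;; prints ((-4 . 1) (-3 . 1) (-2 . 2) (1 . 1) (2 . 1) (3 . 2))
```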

4. Conclusion and Further Work

4.1. Conclusion

As follows immediately from the introduction, this report is essentially a single-point estimate of the difficulty distribution of a university-level problem set.

As far as the author knows, this is the first complete difficulty breakdown of a university-level problem set.

As mentioned in section 3.2, the complete solution of the problem set required 729 hours. In simple words, this is a very long time. Assuming a standard 8-hour working day, the complete solution would take about 91 working days, that is, about 18 five-day working weeks, or more than four months of full-time work.

In the preface to the second edition, the authors claim that an abridged version of the course (e.g. dropping the logic programming part, the part dedicated to the implementation of the register-machine simulator, and most of the compiler-related sections) can be covered in one semester. This statement is consistent with the numbers presented in this report. Nevertheless, as teachers would probably not want to assign every problem in the book, they would need to make a selection based on both the coverage of the course topics and the time required. The author hopes that this report provides insight into the difficulty aspect.

That said, the author would rather recommend opting for a two-semester course. If several of the hardest problems (i.e. those discussed in section 3.3) are left out, the course can fit into two 300-hour modules. Three hundred hours per semester-long course matches the author’s experience of studying partial differential equations at the Moscow Institute of Physics and Technology.

Another important consideration is the amount of time that instructors need to check solutions and write feedback for the students. It is reasonable to assume that marking the solutions and writing feedback would take time comparable (within an order of magnitude) to the time needed to solve the problem set, since a marker has to visit every solution at least once. For simplicity, the author assumes that writing feedback requires 72 hours per student, about a tenth of the solution time.

This figure is then multiplied by the expected number of students per group, which varies between institutions but can be bounded from below by 5. The rough estimate is therefore at least \(72 \cdot 5 = 360\) hours, or 45 full eight-hour working days (about two months). This duration is hardly practicable for a lone teacher, even if spread over two semesters (180 hours each). On the other hand, if the primary teacher is allowed to hire additional staff for marking, the problem becomes manageable again. One application of this report may be as supporting evidence for lead instructors (professors) asking their school administration for teaching assistants.

4.2. Further work

The field of difficulty assessment of university courses (especially with computer-based tools) still offers much to investigate. As far as the author knows, this is the first exhaustive difficulty assessment of a university course. (This is not to say that SICP has not been solved in full before; various solutions can be found on many well-known software forges.)

The first natural direction of research would then be expanding the same effort towards other problem sets and other subjects.

On the other hand, this report is just a single-point estimate and is therefore extremely biased. It would be a significant contribution if the same problem set (or parts of it, or even single problems) were solved by different people following the same protocol.

The author considers the solution protocol, the software setup, and the time-tracking procedure to be contributions of this report.

Professors teaching such a course are encouraged to show this report to their students and to suggest that the students solve the assigned problems following the protocol given here.

Another research direction could be finding an optimal curriculum design beyond the areas covered by SICP. It would not be surprising if students decided not to advance further in the course once their personal difficulty assessment exceeded some unknown threshold. In other words, the author suspects that, at some point, students may feel an emotion that could be expressed as, “I have been solving this for too long, and see little progress; I should stop.”

It would be interesting to measure such a threshold and to suggest curriculum design strategies that aim to minimise course drop-out. Such strategies may include attempts at hooking into students’ intrinsic motivation (proper measurements of the execution process may provide insight into where it is hidden), as well as a better-designed extrinsic motivation toolset (e.g. better KPIs for rewards and penalties, where proper measures should be helpful as well).

It would be interesting to observe whether the students who follow the protocol (and see their progress after each session) are more or less likely to drop the course than those who do not. This could constitute a test of intrinsic motivation in line with the self-determination theory of Deci and Ryan (see [32]).

Another important direction may be the development and formalisation of coursework submission formats, in order to facilitate further collection of similar data on this or other problem sets.

4.3. Informal review

This section contains the author’s personal view on the problem set and the questions it raises.

The author (Vladimir Nikishkin) enjoyed doing it. On the other hand, it is hard to believe that teaching this course to first-year undergraduate students can easily be made successful. It is unlikely that a real-world student can dedicate seven hundred hours to a single subject without significant support, even if the subject is spread over two semesters (all the more so considering that 25 years have passed since the second edition was released, during which time the world of programming has expanded enormously). Even if such a student is found, that student would probably have other subjects in the same semester, as well as classes and demonstrations to attend.

Admittedly, out of almost four hundred exercises, the author cannot find a single superfluous one. Moreover, the author had to add some extra activities in order to cover several topics better. Every exercise teaches some valuable concept and nudges the student into thinking more deeply.

The course could have been improved in the area of garbage collection and other memory management topics.

Indeed, the main cons-memory garbage collector is explained in sufficient detail to implement it, but several other parts of the interpreter’s memory model are left without explanation. Very little is said about storing numbers, strings, and other objects efficiently.

There is little information about a rational software development process. While this is not fundamental knowledge, it would be helpful to undergraduates.

The last two exercises amount to one-fifth of the whole work. It was entirely unexpected to be asked to complete a task in a language other than Scheme after having already finished most of the exercises.

Probably the biggest drawback of the book is the absence of any conclusion. The book does point the reader in various directions by means of an extensive bibliography; however, the author, as a willing student, would have liked to see a narrative overview of possible future directions.

4.4. Informal recommendations

If the author may, on the strength of this transformative experience, offer a few suggestions to university curriculum designers, they would be the following:

- Deliberately teach students to use TeX, and especially TeX backed by proper tooling (a professional text editor and supporting software such as syntax checkers, linters, and documentation lookup systems).

This is often considered a meta-cognitive exercise to be solved by the students themselves, but the author’s personal experience is not reassuring in this respect. Very few students, or even professionals, use TeX efficiently. It took more than 50 hours just to refresh the TeX skills that the author had originally learnt in order to write a thesis.

- Deliberately teach students to touch-type. This may not be necessary in the regions where touch-typing is included in the standard high school curriculum, but poor touch-typing skills are still a major problem in most parts of the world.

- Deliberately teach students to read software manuals. Much modern software has manuals built piece-wise right into the software itself, and reading the whole manual is often not required to perform a task. However, reading _some_ manual at least once from the first page to the last is a very enlightening experience, and it also teaches how to estimate the time needed to master _a_ piece of software. As a by-product, this experience may help students write better manuals for their own software.

- Teach students to use a timer when doing homework, even if it is not an org-mode timer. A realistic assessment of how much effort things actually take is a paradigm-shifting experience.

- When writing a book on any subject, start from designing exercises, and afterwards write the text that helps to develop the skills required to solve those. Reading SICP without doing the exercises proved to be almost useless for this project, which was done two years after the first reading.

- Consider introducing elements of industrial illustration standards (UML, ArchiMate) into the teaching flow of an introductory programming course. Courses created specifically to cover these standards typically suffer from being disconnected from a problem domain. (Few people would like to draw yet another model of an ATM.) Introductory programming provides a surrogate domain that can be mapped onto the diagrams relatively easily and is unlikely to cause rejection.

5. Materials

This section attempts to provide a complete list of materials used in the process of the problem set solution. It is not to be confused with the list of materials used in the preparation of this Experience Report.

5.1. Books

- Structure and Interpretation of Computer Programs 2nd Ed. ([2])

- Structure and Interpretation of Computer Programs 1st Ed. ([1])

- Modern Fortran Explained 2018. ([24])

- Revised\(^7\) Report on the Algorithmic Language Scheme. ([34])

- Logic Programming: A Classified Bibliography. ([4])

- Chibi-Scheme Manual. ([33])

- TikZ Manual. ([39])

- PlantUML Manual. ([27])

- UML Weekend Crash Course. ([26])

- GNU Emacs Manual. ([38])

- GNU Emacs Lisp Reference Manual. ([37])

- GNU Emacs Org-Mode Manual. ([11])

- Debugging With GDB. ([36])

- Implementations of Prolog. ([6])

5.2. Software

5.3. Papers

- Revised Report on the Propagator Model. ([30])

- On Implementing Prolog In Functional Programming. ([8])

- eu-Prolog, Reference Manual. ([20])

References

| [1] | Harold Abelson and Gerald J. Sussman. Structure and Interpretation of Computer Programs. MIT Press, 1 edition, 1985. [ bib ] |

| [2] | Harold Abelson, Gerald J. Sussman, and Julia Sussman. Structure and Interpretation of Computer Programs. MIT Press, 2 edition, 1996. [ bib ] |

| [3] | Dan L. Adler and Jacob S. Kounin. Some factors operating at the moment of resumption of interrupted tasks. 7(2):255--267. [ bib | DOI | http ] |

| [4] | Isaac Balbin and Koenraad Lecot. Logic Programming. Springer Netherlands, 1985. [ bib | DOI | http ] |

| [5] | Eric Berne. What Do You Say After You Say Hello? Bantam Books, New York, 1973. [ bib ] |

| [6] | John A. Campbell, editor. Implementations of Prolog. Ellis Horwood/Halsted Press/Wiley, 1984. [ bib ] |

| [7] | Taylor Campbell et al. MIT/GNU Scheme, 2019. [ bib | http ] |

| [8] | Mats Carlsson. On implementing Prolog in functional programming. 2(4):347--359, 1984. [ bib | DOI | http ] |

| [9] | Karen L. St. Clair. A case against compulsory class attendance policies in higher education. 23(3):171--180, 1999. [ bib | DOI | http ] |

| [10] | Edwin L. Crow and Kunio Shimizu. Lognormal Distributions: Theory and Applications. Routledge, 5 2018. [ bib | DOI | http ] |

| [11] | Carsten Dominik. The Org-Mode 7 Reference Manual: Organize Your Life with GNU Emacs. Network Theory, UK, 2010. with contributions by David O'Toole, Bastien Guerry, Philip Rooke, Dan Davison, Eric Schulte, and Thomas Dye. [ bib ] |

| [12] | Carsten Dominik et al. Org-mode, 2019. [ bib | http ] |

| [13] | John Ellson et al. Graphviz. [ bib | http ] |

| [14] | Matthias Felleisen, Robert Bruce Findler, Matthew Flatt, and Shriram Krishnamurthi. The structure and interpretation of the computer science curriculum. 14:365--378, 07 2004. [ bib | DOI ] |

| [15] | Matthias Felleisen, Robert Bruce Findler, Matthew Flatt, and Shriram Krishnamurthi. How to Design Programs: an Introduction to Programming and Computing. The MIT Press, Cambridge, Massachusetts, 2018. [ bib ] |

| [16] | Free Software Foundation. GNU Emacs, 2019. [ bib | http ] |

| [17] | Free Software Foundation. GNU debugger, 2020. [ bib | http ] |

| [18] | Floyd W. Gembicki and Yacov Y. Haimes. Approach to performance and sensitivity multiobjective optimization: The goal attainment method. 20(6):769--771, December 1975. [ bib | DOI | http ] |

| [19] | Martin Hlosta, Drahomira Herrmannova, Lucie Vachova, Jakub Kuzilek, Zdenek Zdrahal, and Annika Wolff. Modelling student online behaviour in a virtual learning environment. 2018. [ bib ] |

| [20] | Eugene Kohlbecker. eu-Prolog, reference manual and report. Technical report, University of Indiana (Bloomington), Computer Science Department, 04 1984. [ bib ] |

| [21] | E. W. Kooker. Changes in grade distributions associated with changes in class attendance policies. 13:56--57, 1976. [ bib ] |

| [22] | Kelly Y. L. Ku and Irene T. Ho. Metacognitive strategies that enhance critical thinking. 5(3):251--267, July 2010. [ bib | DOI | http ] |

| [23] | Douglas McGregor. Theory X and theory Y. 358:374, 1960. [ bib ] |

| [24] | Michael Metcalf, John Reid, and Malcolm Cohen. Modern Fortran Explained. Oxford University Press, 10 2018. [ bib | DOI | http ] |

| [25] | Vladimir Nikishkin. A full solution to the structure and interpretation of computer programs. [ bib | http ] |

| [26] | Thomas Pender. UML Weekend Crash Course. Hungry Minds, Indianapolis, IN, 2002. [ bib ] |

| [27] | PlantUML Developers. Drawing UML with plantuml. [ bib | http ] |

| [28] | PlantUML Developers. PlantUML. [ bib | http ] |

| [29] | Project Jupyter Developers. Jupyter Notebook: a server-client application that allows editing and running notebook documents via a web browser., 2019. [ bib | http ] |

| [30] | Alexey Radul and Gerald J. Sussman. Revised report on the propagator model. [ bib | http ] |

| [31] | Jose A. O. Ruiz et al. geiser, 2020. [ bib | .html ] |

| [32] | Richard M Ryan and Edward L Deci. Self-determination theory: Basic psychological needs in motivation, development, and wellness. Guilford Publications, 2017. [ bib ] |

| [33] | Alex Shinn. Chibi-Scheme. [ bib | http ] |

| [34] | Alex Shinn, John Cowan, Arthur A. Gleckler, et al., editors. Revised7 Report on the Algorithmic Language Scheme. 2013. [ bib | http ] |

| [35] | Alex Shinn et al. Chibi-Scheme, 2019. [ bib | http ] |

| [36] | Richard Stallman et al. Debugging with GDB, 2020. [ bib | .html ] |

| [37] | Richard Stallman et al. GNU Emacs Lisp Reference Manual, 2020. [ bib | .pdf ] |

| [38] | Richard Stallman et al. GNU Emacs Manual, 2020. [ bib | .pdf ] |

| [39] | Till Tantau. The TikZ and PGF Packages. [ bib | .pdf ] |

| [40] | Till Tantau et al. Portable graphics format. [ bib | http ] |

| [41] | TeX User Groups. TeX Live, 2019. [ bib | http ] |

| [42] | Jeffrey J. VanWormer, Simone A. French, Mark A. Pereira, and Ericka M. Welsh. The impact of regular self-weighing on weight management: A systematic literature review. 5(1):54, 2008. [ bib | DOI | http ] |

| [43] | Patrick Volkerding et al. Slackware Linux, 2019. |

6. Appendix: Analysed data on problem difficulty

For the code used to generate the tables in the following sections, see: Appendix: Emacs Lisp code for data analysis.

6.1. Analysed time consumption

| No | Exercise Name | Days Spent | Spans Sessions | Minutes Spent |

|---|---|---|---|---|

| 1 | Exercise 1.1 Interpreter result | 1.211 | 2 | 459 |

| 2 | Exercise 1.2 Prefix form | 0.001 | 1 | 2 |

| 3 | Figure 1.1 Tree representation, showing the value of each su | 0.007 | 1 | 10 |

| 4 | Exercise 1.4 Compound expressions | 0.003 | 1 | 4 |

| 5 | Exercise 1.5 Ben’s test | 0.008 | 1 | 11 |

| 6 | Exercise 1.6 If is a special form | 0.969 | 2 | 118 |

| 7 | Exercise 1.7 Good enough? | 0.949 | 3 | 436 |

| 8 | Exercise 1.8 Newton’s method | 0.197 | 2 | 193 |

| 9 | Exercise 1.10 Ackermann’s function | 3.038 | 2 | 379 |

| 10 | Exercise 1.11 Recursive vs iterative | 0.037 | 1 | 54 |

| 11 | Exercise 1.12 Recursive Pascal’s triangle | 0.012 | 1 | 17 |

| 12 | Exercise 1.13 Fibonacci | 0.092 | 1 | 132 |

| 13 | Exercise 1.9 Iterative or recursive? | 3.722 | 2 | 65 |

| 14 | Exercise 1.14 count-change | 1.038 | 2 | 50 |

| 15 | Exercise 1.15 sine | 0.267 | 2 | 195 |

| 16 | Exercise 1.16 Iterative exponentiation | 0.032 | 1 | 46 |

| 17 | Exercise 1.17 Fast multiplication | 0.019 | 1 | 28 |

| 18 | Exercise 1.18 Iterative multiplication | 0.497 | 2 | 23 |

| 19 | Exercise 1.19 Logarithmic Fibonacci | 1.374 | 2 | 93 |

| 20 | Exercise 1.20 GCD applicative vs normal | 0.099 | 1 | 142 |

| 21 | Exercise 1.21 smallest-divisor | 0.027 | 1 | 39 |

| 22 | Exercise 1.22 timed-prime-test | 0.042 | 1 | 61 |

| 23 | Exercise 1.23 (next test-divisor) | 0.383 | 2 | 5 |

| 24 | Exercise 1.24 Fermat method | 0.067 | 1 | 96 |

| 25 | Exercise 1.25 expmod | 0.051 | 1 | 74 |

| 26 | Exercise 1.26 square vs mul | 0.003 | 1 | 4 |

| 27 | Exercise 1.27 Carmichael numbers | 0.333 | 2 | 102 |

| 28 | Exercise 1.28 Miller-Rabin | 0.110 | 1 | 158 |

| 29 | Exercise 1.29 Simpson’s integral | 0.464 | 2 | 68 |

| 30 | Exercise 1.30 Iterative sum | 0.030 | 2 | 10 |

| 31 | Exercise 1.31 Product | 0.028 | 1 | 40 |

| 32 | Exercise 1.32 Accumulator | 0.017 | 1 | 24 |

| 33 | Exercise 1.33 filtered-accumulate | 0.092 | 1 | 133 |

| 34 | Exercise 1.34 lambda | 0.006 | 1 | 8 |

| 35 | Exercise 1.35 fixed-point | 0.265 | 2 | 87 |

| 36 | Exercise 1.36 fixed-point-with-dampening | 0.035 | 1 | 50 |

| 37 | Exercise 1.37 cont-frac | 0.569 | 2 | 348 |

| 38 | Exercise 1.38 euler constant | 0.000 | 1 | 0 |

| 39 | Exercise 1.39 tan-cf | 0.025 | 1 | 36 |

| 40 | Exercise 1.40 newtons-method | 0.205 | 2 | 6 |

| 41 | Exercise 1.41 double-double | 0.010 | 1 | 15 |

| 42 | Exercise 1.42 compose | 0.004 | 1 | 6 |

| 43 | Exercise 1.43 repeated | 0.019 | 1 | 27 |

| 44 | Exercise 1.44 smoothing | 0.099 | 2 | 142 |

| 45 | Exercise 1.45 nth-root | 0.056 | 1 | 80 |

| 46 | Exercise 1.46 iterative-improve | 0.033 | 1 | 48 |

| 47 | Exercise 2.1 make-rat | 1.608 | 2 | 109 |

| 48 | Exercise 2.2 make-segment | 0.024 | 1 | 34 |

| 49 | Exercise 2.3 make-rectangle | 2.183 | 2 | 174 |

| 50 | Exercise 2.4 cons-lambda | 0.007 | 1 | 10 |

| 51 | Exercise 2.5 cons-pow | 0.041 | 1 | 59 |

| 52 | Exercise 2.6 Church Numerals | 0.024 | 1 | 34 |

| 53 | Exercise 2.7 make-interval | 0.019 | 1 | 28 |

| 54 | Exercise 2.8 sub-interval | 0.124 | 1 | 58 |

| 55 | Exercise 2.9 interval-width | 0.006 | 1 | 8 |

| 56 | Exercise 2.10 div-interval-better | 0.010 | 1 | 15 |

| 57 | Exercise 2.11 mul-interval-nine-cases | 0.052 | 1 | 75 |

| 58 | Exercise 2.12 make-center-percent | 0.393 | 2 | 43 |

| 59 | Exercise 2.13 formula for tolerance | 0.003 | 1 | 5 |

| 60 | Exercise 2.14 parallel-resistors | 0.047 | 1 | 68 |

| 61 | Exercise 2.15 better-intervals | 0.007 | 1 | 10 |

| 62 | Exercise 2.16 interval-arithmetic | 0.002 | 1 | 3 |

| 63 | Exercise 2.17 last-pair | 0.966 | 2 | 89 |

| 64 | Exercise 2.18 reverse | 0.006 | 1 | 9 |

| 65 | Exercise 2.19 coin-values | 0.021 | 1 | 30 |

| 66 | Exercise 2.20 dotted-tail notation | 0.311 | 2 | 156 |

| 67 | Exercise 2.21 map-square-list | 0.013 | 1 | 19 |

| 68 | Exercise 2.22 wrong list order | 0.007 | 1 | 10 |

| 69 | Exercise 2.23 for-each | 0.006 | 1 | 9 |

| 70 | Exercise 2.24 list-plot-result | 0.111 | 2 | 75 |

| 71 | Exercise 2.25 caddr | 0.037 | 1 | 54 |

| 72 | Exercise 2.26 append cons list | 0.011 | 1 | 16 |

| 73 | Exercise 2.27 deep-reverse | 0.433 | 2 | 40 |

| 74 | Exercise 2.28 fringe | 0.026 | 1 | 37 |

| 75 | Exercise 2.29 mobile | 0.058 | 1 | 83 |

| 76 | Exercise 2.30 square-tree | 0.100 | 2 | 122 |

| 77 | Exercise 2.31 tree-map square tree | 0.019 | 1 | 27 |

| 78 | Exercise 2.32 subsets | 0.010 | 1 | 15 |

| 79 | Exercise 2.33 map-append-length | 0.375 | 2 | 96 |

| 80 | Exercise 2.34 horners-rule | 0.006 | 1 | 8 |

| 81 | Exercise 2.35 count-leaves-accumulate | 0.011 | 1 | 16 |

| 82 | Exercise 2.36 accumulate-n | 0.006 | 1 | 9 |

| 83 | Exercise 2.37 matrix-*-vector | 0.017 | 1 | 24 |

| 84 | Exercise 2.38 fold-left | 0.372 | 2 | 65 |

| 85 | Exercise 2.39 reverse fold-right fold-left | 0.005 | 1 | 7 |

| 86 | Exercise 2.40 unique-pairs | 0.029 | 1 | 42 |

| 87 | Exercise 2.41 triple-sum | 2.195 | 2 | 57 |

| 88 | Figure 2.8 A solution to the eight-queens puzzle. | 0.001 | 1 | 2 |

| 89 | Exercise 2.42 k-queens | 3.299 | 2 | 122 |

| 90 | Exercise 2.43 slow k-queens | 0.019 | 1 | 28 |

| 91 | Exercise 2.46 make-vect | 2.578 | 5 | 535 |

| 92 | Exercise 2.47 make-frame | 0.083 | 1 | 10 |

| 93 | Exercise 2.48 make-segment | 0.054 | 1 | 78 |

| 94 | Exercise 2.49 segments->painter applications | 0.294 | 2 | 139 |

| 95 | Exercise 2.50 flip-horiz and rotate270 and rotate180 | 0.019 | 1 | 27 |

| 96 | Exercise 2.51 below | 1.801 | 4 | 524 |

| 97 | Exercise 2.44 up-split | 1.169 | 2 | 89 |

| 98 | Exercise 2.45 split | 0.113 | 2 | 23 |

| 99 | Exercise 2.52 modify square-limit | 0.450 | 2 | 58 |

| 100 | Exercise 2.53 quote introduction | 0.008 | 1 | 11 |

| 101 | Exercise 2.54 equal? implementation | 0.050 | 1 | 72 |

| 102 | Exercise 2.55 quote quote | 0.000 | 1 | 0 |

| 103 | Exercise 2.56 differentiation-exponentiation | 0.393 | 2 | 65 |

| 104 | Exercise 2.57 differentiate-three-sum | 0.560 | 3 | 147 |

| 105 | Exercise 2.58 infix-notation | 0.112 | 1 | 161 |

| 106 | Exercise 2.59 union-set | 0.277 | 2 | 6 |

| 107 | Exercise 2.60 duplicate-set | 0.012 | 1 | 17 |

| 108 | Exercise 2.62 ordered-union-set (ordered list) | 0.973 | 2 | 14 |

| 109 | Exercise 2.61 sets as ordered lists | 0.004 | 1 | 6 |

| 110 | Exercise 2.63 tree->list (binary search tree) | 0.078 | 1 | 113 |

| 111 | Exercise 2.64 balanced-tree | 2.740 | 3 | 106 |

| 112 | Exercise 2.65 tree-union-set | 9.785 | 2 | 47 |

| 113 | Exercise 2.66 tree-lookup | 0.035 | 1 | 50 |

| 114 | Exercise 2.67 Huffman decode a simple message | 0.303 | 3 | 108 |

| 115 | Exercise 2.68 Huffman encode a simple message | 0.023 | 1 | 33 |

| 116 | Exercise 2.69 Generate Huffman tree | 0.608 | 2 | 160 |

| 117 | Exercise 2.70 Generate a tree and encode a song | 0.072 | 2 | 57 |

| 118 | Exercise 2.71 Huffman tree for frequencies 5 and 10 | 0.258 | 2 | 202 |

| 119 | Exercise 2.72 Huffman order of growth | 0.050 | 2 | 26 |

| 120 | Exercise 2.73 data-driven-deriv | 0.605 | 2 | 189 |

| 121 | Exercise 2.74 Insatiable Enterprises | 0.410 | 4 | 171 |

| 122 | Exercise 2.75 make-from-mag-ang message passing | 0.019 | 1 | 28 |

| 123 | Exercise 2.76 types or functions? | 0.003 | 1 | 5 |

| 124 | Exercise 2.77 generic-algebra-magnitude | 0.772 | 3 | 190 |

| 125 | Exercise 2.78 Ordinary numbers for Scheme | 0.212 | 2 | 67 |

| 126 | Exercise 2.79 generic-equality | 1.786 | 2 | 28 |

| 127 | Exercise 2.80 Generic arithmetic zero? | 0.056 | 1 | 80 |

| 128 | Exercise 2.81 coercion to-itself | 0.749 | 3 | 330 |

| 129 | Exercise 2.82 three-argument-coercion | 0.433 | 2 | 230 |

| 130 | Exercise 2.83 Numeric Tower and (raise) | 0.717 | 3 | 116 |

| 131 | Exercise 2.84 Using raise (raise-type) in apply-generic | 0.865 | 2 | 135 |

| 132 | Exercise 2.85 Dropping a type | 3.089 | 5 | 507 |

| 133 | Exercise 2.86 Compound complex numbers | 0.274 | 2 | 108 |

| 134 | Exercise 2.87 Generalized zero? | 0.919 | 4 | 389 |

| 135 | Exercise 2.88 Subtraction of polynomials | 0.646 | 3 | 50 |

| 136 | Exercise 2.89 Dense term-lists | 0.083 | 1 | 120 |

| 137 | Exercise 2.90 Implementing dense polynomials as a separate p | 0.400 | 2 | 148 |

| 138 | Exercise 2.91 Division of polynomials | 0.111 | 2 | 103 |

| 139 | Exercise 2.92 Ordering of variables so that addition and mul | 4.556 | 11 | 964 |

| 140 | Exercise 2.93 Rational polynomials | 0.378 | 3 | 198 |

| 141 | Exercise 2.94 Greatest-common-divisor for polynomials | 0.091 | 1 | 131 |

| 142 | Exercise 2.95 Illustrate the non-integer problem | 0.450 | 2 | 149 |

| 143 | Exercise 2.96 Integerizing factor | 0.325 | 2 | 275 |

| 144 | Exercise 2.97 Reduction of polynomials | 0.201 | 1 | 140 |

| 145 | Exercise 3.1 accumulators | 0.425 | 2 | 53 |

| 146 | Exercise 3.2 make-monitored | 0.027 | 1 | 39 |

| 147 | Exercise 3.3 password protection | 0.010 | 1 | 14 |

| 148 | Exercise 3.4 call-the-cops | 0.010 | 1 | 15 |

| 149 | Exercise 3.5 Monte-Carlo | 0.528 | 2 | 98 |

| 150 | Exercise 3.6 reset a prng | 0.479 | 2 | 68 |

| 151 | Exercise 3.7 Joint accounts | 0.059 | 1 | 85 |

| 152 | Exercise 3.8 Right-to-left vs Left-to-right | 0.026 | 1 | 38 |

| 153 | Exercise 3.9 Environment structures | 21.030 | 10 | 1100 |

| 154 | Exercise 3.10 Using let to create state variables | 4.933 | 2 | 138 |

| 155 | Exercise 3.11 Internal definitions | 0.994 | 2 | 219 |

| 156 | Exercise 3.12 Drawing append! | 2.966 | 3 | 347 |

| 157 | Exercise 3.13 make-cycle | 0.010 | 1 | 14 |

| 158 | Exercise 3.14 mystery | 0.385 | 2 | 77 |

| 159 | Exercise 3.15 set-to-wow! | 1.942 | 3 | 117 |

| 160 | Exercise 3.16 count-pairs | 0.171 | 1 | 118 |

| 161 | Exercise 3.17 Real count-pairs | 0.029 | 1 | 42 |

| 162 | Exercise 3.18 Finding cycles | 0.012 | 1 | 17 |

| 163 | Exercise 3.19 Efficient finding cycles | 0.934 | 2 | 205 |

| 164 | Exercise 3.20 Procedural set-car! | 0.633 | 2 | 121 |

| 165 | Exercise 3.21 queues | 0.021 | 1 | 30 |

| 166 | Exercise 3.22 procedural queue | 0.294 | 2 | 67 |

| 167 | Exercise 3.23 dequeue | 0.049 | 2 | 71 |

| 168 | Exercise 3.24 tolerant tables | 0.780 | 3 | 33 |

| 169 | Exercise 3.25 multilevel tables | 2.103 | 2 | 486 |

| 170 | Exercise 3.26 binary tree table | 0.013 | 1 | 18 |

| 171 | Exercise 3.27 memoization | 0.802 | 2 | 2 |

| 172 | Exercise 3.28 primitive or-gate | 1.316 | 2 | 783 |

| 173 | Exercise 3.29 Compound or-gate | 0.001 | 1 | 2 |

| 174 | Exercise 3.30 ripple-carry adder | 0.009 | 1 | 13 |

| 175 | Exercise 3.31 Initial propagation | 0.013 | 1 | 18 |

| 176 | Exercise 3.32 Order matters | 0.007 | 1 | 10 |

| 177 | Exercise 3.33 averager constraint | 9.460 | 3 | 198 |

| 178 | Exercise 3.34 Wrong squarer | 0.042 | 1 | 61 |

| 179 | Exercise 3.35 Correct squarer | 0.012 | 1 | 17 |

| 180 | Exercise 3.36 Connector environment diagram | 3.319 | 3 | 263 |

| 181 | Exercise 3.37 Expression-based constraints | 0.037 | 1 | 53 |

| 182 | Exercise 3.38 Timing | 0.061 | 1 | 88 |

| 183 | Exercise 3.39 Serializer | 1.266 | 4 | 269 |

| 184 | Exercise 3.40 Three parallel multiplications | 5.973 | 3 | 332 |

| 185 | Exercise 3.41 Better protected account | 4.229 | 2 | 97 |

| 186 | Exercise 3.42 Saving on serializers | 0.023 | 1 | 33 |

| 187 | Exercise 3.43 Multiple serializations | 0.040 | 1 | 58 |

| 188 | Exercise 3.44 Transfer money | 0.005 | 1 | 7 |

| 189 | Exercise 3.45 new plus old serializers | 0.004 | 1 | 6 |

| 190 | Exercise 3.46 broken test-and-set! | 0.007 | 1 | 10 |

| 191 | Exercise 3.47 semaphores | 1.044 | 2 | 53 |

| 192 | Exercise 3.48 serialized-exchange deadlock | 0.022 | 1 | 31 |

| 193 | Exercise 3.49 When numbering accounts doesn’t work | 0.008 | 1 | 11 |

| 194 | Exercise 3.50 stream-map multiple arguments | 0.317 | 3 | 96 |

| 195 | Exercise 3.51 stream-show | 0.007 | 1 | 10 |

| 196 | Exercise 3.52 streams with mind-boggling | 0.034 | 1 | 49 |

| 197 | Exercise 3.53 stream power of two | 0.016 | 1 | 23 |

| 198 | Exercise 3.54 mul-streams | 0.005 | 1 | 7 |

| 199 | Exercise 3.55 streams partial-sums | 0.013 | 1 | 18 |

| 200 | Exercise 3.56 Hamming’s streams-merge | 0.015 | 1 | 21 |

| 201 | Exercise 3.57 exponential additions fibs | 0.007 | 1 | 10 |

| 202 | Exercise 3.58 Cryptic stream | 0.010 | 1 | 14 |

| 203 | Exercise 3.59 power series | 0.422 | 2 | 30 |

| 204 | Exercise 3.60 mul-series | 0.048 | 1 | 69 |